Searches for duplicate files on the selected drives or shares.

In this context, duplicate files are files which seem to exist more than once. Such redundant files increase the allocated space of your disks unnecessarily.

A detailed step-by-step example of how to use the duplicate search can be found here.

Context tab: Duplicate Search

Search Mode:

Select one of the three duplicate search modes. You can search for duplicate files, duplicate folders, or files that do not have any duplicates.

Duplicate Files |

Searches for files that are duplicates of each other, using the selected comparison method. |

Duplicate Folders |

Searches for folders that are duplicates of each other. Two folders are considered duplicates if they contain the same number of subfolders and files, and if these subfolders and files are also equal to each other according to the selected comparison method. |

Unique Files |

This setting searches for files that do not have any duplicates across the selected search paths. |
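The folder comparison described above can be pictured with a small Python sketch. It is only an illustration, not how TreeSize works internally: it assumes that files are compared by content (SHA-256) and that file and folder names are ignored. The helper names `file_digest`, `folder_signature` and `duplicate_folders` are made up for this example.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path) -> str:
    """SHA-256 of a file's content, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def folder_signature(folder: Path) -> str:
    """Digest over the sorted signatures of all direct children.

    Assumption for this sketch: names are ignored and links are followed,
    so a cyclic directory link would recurse without end.
    """
    parts = []
    for child in folder.iterdir():
        if child.is_dir():
            parts.append("D:" + folder_signature(child))
        elif child.is_file():
            parts.append("F:" + file_digest(child))
    parts.sort()
    return hashlib.sha256("\n".join(parts).encode()).hexdigest()


def duplicate_folders(folders):
    """Group folders whose signatures match; keep groups with 2+ members."""
    groups = defaultdict(list)
    for folder in folders:
        groups[folder_signature(Path(folder))].append(Path(folder))
    return [group for group in groups.values() if len(group) > 1]
```

Two folders end up in the same group only if they hold the same number of files and subfolders and every child has an equal counterpart, which mirrors the rule stated for the "Duplicate Folders" mode.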

Comparison method:

Defines which criteria should be used to identify files as duplicates. Here is a list of the available strategies:

File Content |

This option uses MD5 checksums for comparison by default. With this method, a so-called hash value is calculated from the contents of each file. Files with the same content have the same hash value, while files with different content will almost certainly have different values. Empty files are ignored, since there is no content to compare. This method is more accurate than comparing files by name, size and date, but it is also much slower.

Within the file search options, it is possible to adjust this method to use SHA256 hashes instead. The SHA256 algorithm further reduces the statistical risk of hash collisions compared to MD5 but it is also significantly slower. This option is only visible when using the expert application mode. |

Size, Name and Date |

Select this option to identify duplicate files by looking for equal names, sizes and last change dates. This is much faster than using checksums to identify duplicates, but it is also less accurate. |

Name and Size |

Select this option to identify duplicate files by looking for equal names and sizes. This works like "Size, Name and Date", but ignores the "last modified" time stamp of the files. |

Name |

Select this option to find all files with equal file names. This comparison method can be helpful when you are searching for undesired copies (e.g. documents which have been copied and modified locally). |

Name without Extension |

Select this option to detect files with equal names, regardless of the file extension. This can be useful when you are searching for duplicated backup files, or for raw and compressed versions of the same image or video (e.g. "MyPhoto.bmp" and "MyPhoto.png"). |

Size and Date |

Compares files according to their size and date values. This allows a faster, but less accurate, search for duplicate files with different names. Accidental copies with names such as "Copy of ..." can be identified quickly using this method. |

Size only |

Select this option to find all files with equal size. |
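To make the difference between the comparison methods more tangible, the following Python sketch expresses each method as a key function: files that produce the same key are treated as duplicates. This is a simplified illustration under our own assumptions, not the TreeSize implementation. The names `content_key`, `COMPARISON_KEYS`, `group_files`, `duplicates` and `unique_files` are hypothetical, and the date is taken from the file's modification time.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def content_key(path, algorithm="md5"):
    """Hash of the file content; None for empty files, which are skipped."""
    path = Path(path)
    if path.stat().st_size == 0:
        return None
    digest = hashlib.new(algorithm)  # pass "sha256" for the stricter variant
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


# One key function per comparison method; equal keys form one group.
COMPARISON_KEYS = {
    "File Content": content_key,
    "Size, Name and Date": lambda p: (p.stat().st_size, p.name, p.stat().st_mtime_ns),
    "Name and Size": lambda p: (p.name, p.stat().st_size),
    "Name": lambda p: p.name,
    "Name without Extension": lambda p: p.stem,
    "Size and Date": lambda p: (p.stat().st_size, p.stat().st_mtime_ns),
    "Size only": lambda p: p.stat().st_size,
}


def group_files(paths, method):
    """Group the given files by the key of the chosen comparison method."""
    key_of = COMPARISON_KEYS[method]
    groups = defaultdict(list)
    for path in map(Path, paths):
        key = key_of(path)
        if key is not None:  # None means "ignore this file"
            groups[key].append(path)
    return list(groups.values())


def duplicates(paths, method):
    """Groups with two or more members ("Duplicate Files" mode)."""
    return [group for group in group_files(paths, method) if len(group) > 1]


def unique_files(paths, method):
    """Files that are alone in their group ("Unique Files" mode)."""
    return [group[0] for group in group_files(paths, method) if len(group) == 1]
```

The metadata-based keys only need one `stat` call per file, whereas the content key has to read every byte. That is why the content comparison is the most accurate method but also the slowest.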

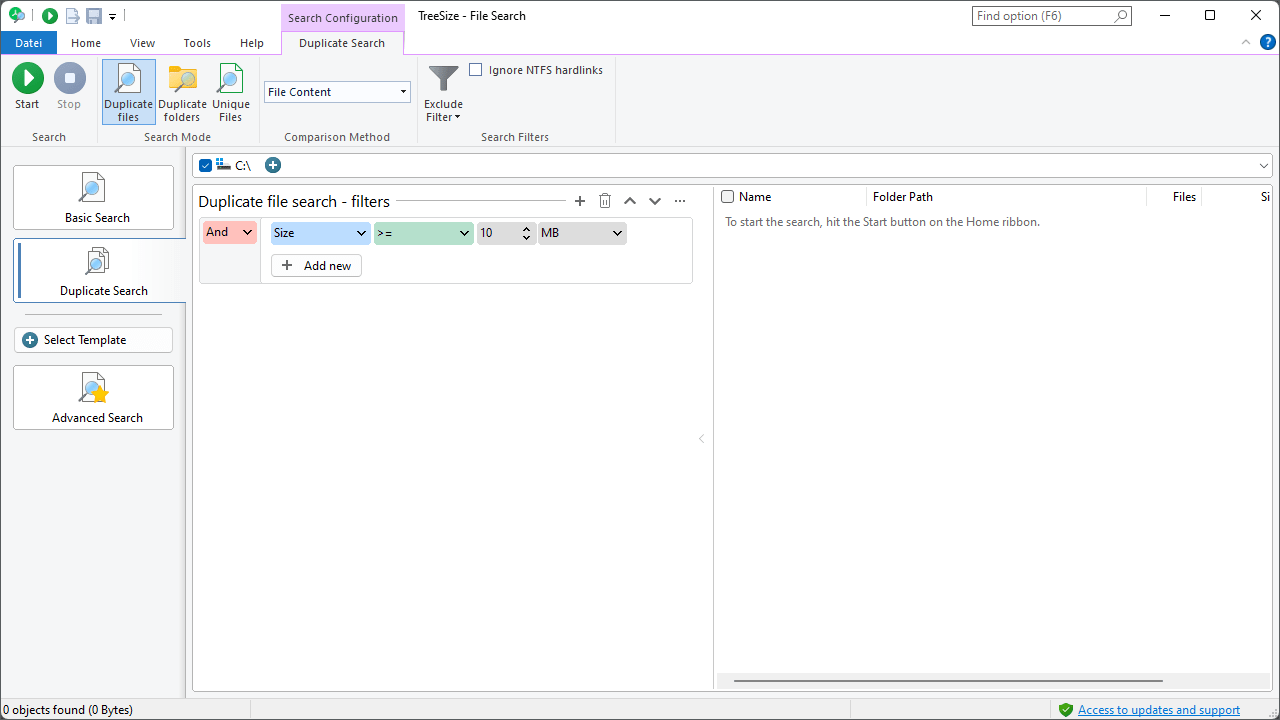

Search Filters:

Additional options to customize the duplicate file search:

Exclude filter |

Lets you activate, deactivate or customize the global exclude filters for this search. By restricting the duplicate search to a specific preselection of files, you can prevent files in certain directories (e.g. your local system directories) from being listed as duplicates. Additionally, this option reduces the number of files to compare, which improves the speed of the search. |

If this option is activated, hardlinks are not regarded as file duplicates. Note: NTFS hardlinks do not allocate additional disk space. Therefore, deleting them does not free up any disk space. In addition, TreeSize uses hardlinks for deduplication. |
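Why hardlinks are not real duplicates can be shown with a short Python sketch. Two paths that are hardlinks of each other point to the same file record, so they report the same volume and file identifier. The function name `drop_hardlinked_paths` is made up for this example, and the approach is an assumption about how such a filter could work, not a description of TreeSize internals.

```python
import os


def drop_hardlinked_paths(paths):
    """Keep only the first path for each physical file.

    Paths that share the same (st_dev, st_ino) pair are hardlinks to one
    file and occupy its disk space only once.
    """
    seen = set()
    kept = []
    for path in paths:
        info = os.stat(path)
        identity = (info.st_dev, info.st_ino)
        if identity in seen:
            continue  # another name for a file that was already listed
        seen.add(identity)
        kept.append(path)
    return kept
```

The `st_nlink` field of the same `os.stat` result tells how many names currently point to the file. Its disk space is released only when the last of these names is deleted.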

Deduplicate:

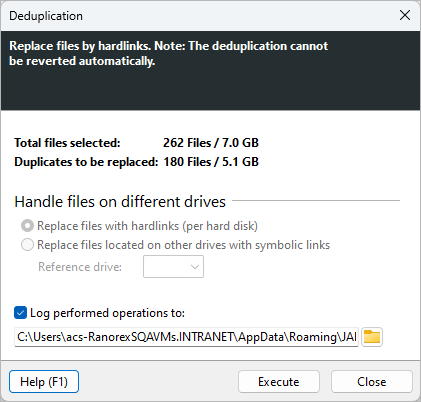

Use the "Operations > deduplicate" button to replace all but one of the checked duplicate files with NTFS hardlinks. You can find further details about deduplicating files in this chapter.

In the configuration window you can select a log file in which the performed replacements are recorded. You can also define how TreeSize handles files located on different hard disks. You can either replace files located on the same hard disk with hardlinks separately, or select a reference drive and replace all files located on other hard disks with symbolic links. Please note that if the permission to create symbolic links cannot be granted, a Windows shortcut (.LNK file) is created instead as a fallback.

The context menu of the duplicate files list offers a feature named "Replace duplicates by hardlinks". This function works just like the "Deduplicate" function, but will handle all selected files instead of checked files.
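The basic idea of replacing duplicates with hardlinks can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the TreeSize implementation: the function name `replace_with_hardlinks` and the temporary suffix `.hardlink-tmp` are hypothetical. Files on a different volume are only reported as skipped, because hardlinks cannot span volumes. The symbolic link and .LNK fallbacks described above are not covered.

```python
import os
from pathlib import Path


def replace_with_hardlinks(group):
    """Keep the first file of a duplicate group and hardlink the others to it.

    Returns (replaced, skipped) so that the caller can write a log.
    """
    keep, *others = [Path(p) for p in group]
    keep_device = keep.stat().st_dev
    replaced, skipped = [], []
    for duplicate in others:
        # Hardlinks only work within one volume; leave other files alone.
        if duplicate.stat().st_dev != keep_device:
            skipped.append(duplicate)
            continue
        # Already a hardlink to the kept file: nothing to do.
        if os.path.samefile(keep, duplicate):
            skipped.append(duplicate)
            continue
        # Create the link under a temporary name first, then swap it in,
        # so the duplicate is never missing if linking fails.
        temporary = duplicate.with_name(duplicate.name + ".hardlink-tmp")
        os.link(keep, temporary)
        os.replace(temporary, duplicate)
        replaced.append(duplicate)
    return replaced, skipped
```

After the call, all replaced paths still exist and show the same content, but they share a single copy of the data on disk. Because all names now refer to one file, changing it through one name changes it for every other name as well.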